Introduction

Service Discovery is not a new technology. Unfortunately, it is poorly understood and rarely implemented. It is a problem that many system architects face, and it is key to several desirable qualities of a modern, cloud-enabled, elastic distributed system, such as reliability, availability and maintainability. There are multiple ways to approach service discovery:

- Hardcode service locations;

- Develop a proprietary solution;

- Use existing technology.

Hardcoding is still the common case. How often do you encounter hardcoded URLs in configuration files?! Developing a proprietary solution is becoming popular too. Multiple companies decided to address Service Discovery by implementing some sort of distributed key-value store. Amongst the popular ones: CoreOS's etcd, Heroku's Doozer, Apache ZooKeeper and Google's Chubby. Even Redis can be used for such purposes. But in many cases the additional software layers and programming complexity are not needed. There is already an existing solution based on DNS. It is called DNS-SD and is defined in RFC 6763.

DNS-SD utilizes PTR, SRV and TXT DNS records to provide flexible service discovery. Because it relies only on these standard record types, it can be served by all major DNS implementations and by the DNS services of all major cloud providers. DNS is a well-established technology, well understood by both Operations and Development personnel, with strong support in programming languages and libraries. It is also highly available through replication.

How does DNS-SD work?

DNS-SD uses three DNS record types: PTR, SRV and TXT:

- PTR record is defined in RFC 1035 as "domain name pointer". Unlike CNAME records, no processing of the contents is performed: the data is returned directly.

- SRV record is defined in RFC 2782 as a "service locator". It provides a protocol-agnostic way to locate services, in contrast to MX records, which apply only to mail. It contains four components: priority, weight, port and target.

- TXT record is defined in RFC 1035 as "text strings".
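To make the SRV format concrete, here is a minimal Python sketch that parses the presentation form of an SRV record (as printed by nslookup in the examples below) into its four fields. `SrvRecord` and `parse_srv` are hypothetical names used only for illustration.

```python
from typing import NamedTuple


class SrvRecord(NamedTuple):
    """The four SRV fields defined in RFC 2782."""
    priority: int  # lower value = tried first
    weight: int    # relative share among records with equal priority
    port: int      # port the service listens on
    target: str    # host name providing the service


def parse_srv(text: str) -> SrvRecord:
    """Parse 'priority weight port target', e.g. '0 0 4218 host1.unilans.net.'."""
    priority, weight, port, target = text.split()  # ValueError if not 4 fields
    record = SrvRecord(int(priority), int(weight), int(port), target)
    # RFC 2782 stores priority, weight and port as 16-bit unsigned integers
    for value in record[:3]:
        if not 0 <= value <= 65535:
            raise ValueError(f"SRV field out of range: {value}")
    return record


print(parse_srv("0 0 4218 host1.unilans.net."))
# SrvRecord(priority=0, weight=0, port=4218, target='host1.unilans.net.')
```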

There are multiple specifics around protocol and service naming conventions that are beyond the scope of this post. For more information please refer to RFC 6763. For the purposes of this article, it is assumed that a proprietary TCP-based service called theService, which has several incarnations, runs on TCP port 4218 on multiple hosts. The basic idea is:

- Create a PTR record for _theService that lists all available incarnations of the service;

- For each incarnation, create an SRV record (where the service is located) and a TXT record (any additional information for the client) that specify the service details.
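The lookup chain can be illustrated without a DNS server. In this minimal sketch a plain dictionary stands in for the unilans.net. zone (an assumption; a real client would query DNS), and the hypothetical `discover` function follows each PTR target to its SRV and TXT records the way a DNS-SD client would. An incarnation that is listed in the PTR record but has no SRV records simply has no endpoints.

```python
# Hypothetical in-memory stand-in for the unilans.net. zone:
# (name, record type) -> list of record values
ZONE = {
    ("_theService._tcp.unilans.net.", "PTR"): [
        "_incarnation1._theService._tcp.unilans.net.",
        "_incarnation2._theService._tcp.unilans.net.",
    ],
    ("_incarnation1._theService._tcp.unilans.net.", "SRV"): ["0 0 4218 host1.unilans.net."],
    ("_incarnation1._theService._tcp.unilans.net.", "TXT"): ["txtvers=1; data=sampledata;"],
}


def lookup(name, rtype):
    """Return the values stored for (name, rtype); DNS names are case-insensitive."""
    for (key_name, key_type), values in ZONE.items():
        if key_name.lower() == name.lower() and key_type == rtype:
            return values
    return []


def discover(service_type):
    """Map every incarnation to its (host, port) endpoints and TXT data."""
    result = {}
    for instance in lookup(service_type, "PTR"):
        endpoints = []
        for srv in lookup(instance, "SRV"):
            _priority, _weight, port, target = srv.split()
            endpoints.append((target, int(port)))
        result[instance] = {"endpoints": endpoints, "txt": lookup(instance, "TXT")}
    return result


for instance, details in discover("_theService._tcp.unilans.net.").items():
    print(instance, details)
```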

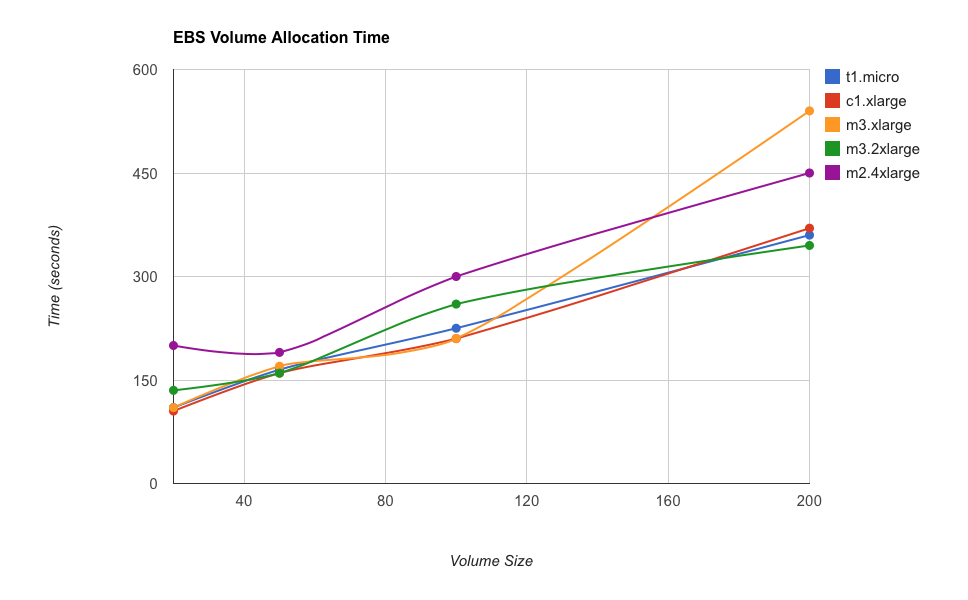

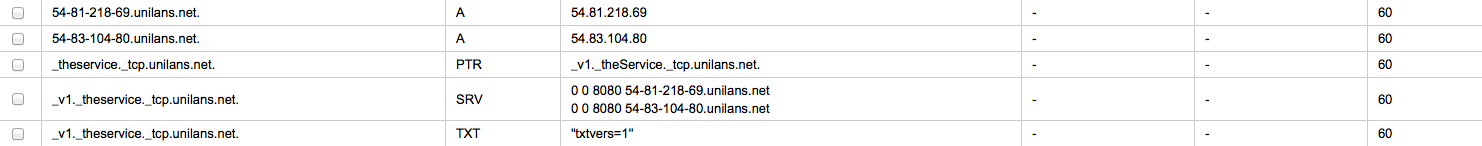

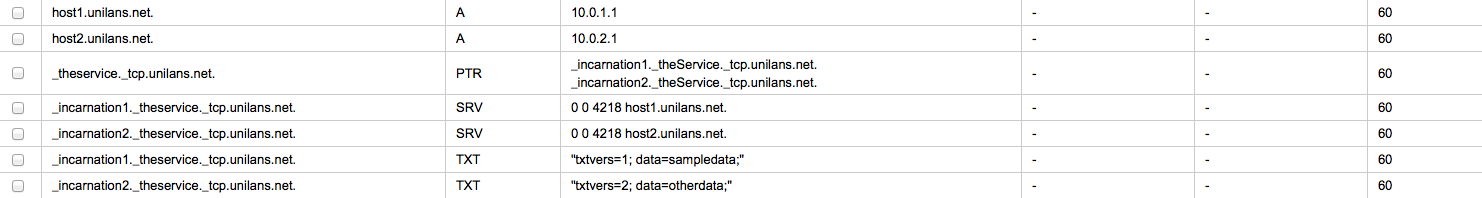

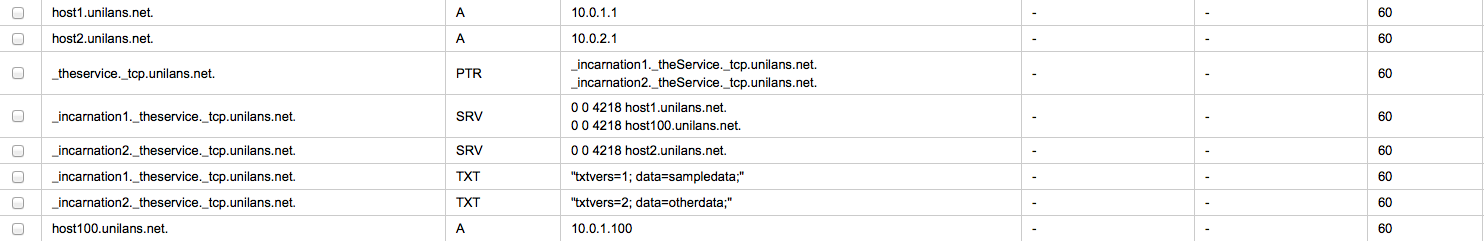

This is what a sample configuration looks like in AWS Route53 for the unilans.net. domain:

The results can be verified using nslookup:

```
:~> nslookup -q=PTR _theService._tcp.unilans.net.
Server:     8.8.8.8
Address:    8.8.8.8#53

Non-authoritative answer:
_theService._tcp.unilans.net    name = _incarnation1._theService._tcp.unilans.net.
_theService._tcp.unilans.net    name = _incarnation2._theService._tcp.unilans.net.

Authoritative answers can be found from:

:~> nslookup -q=any _incarnation1._theService._tcp.unilans.net.
Server:     8.8.8.8
Address:    8.8.8.8#53

Non-authoritative answer:
_incarnation1._theService._tcp.unilans.net  text = "txtvers=1\; data=sampledata\;"
_incarnation1._theService._tcp.unilans.net  service = 0 0 4218 host1.unilans.net.

Authoritative answers can be found from:

:~>
```

Now a client that wants to use incarnation1 of theService has the means to access it (Host: host1.unilans.net, Port: 4218).

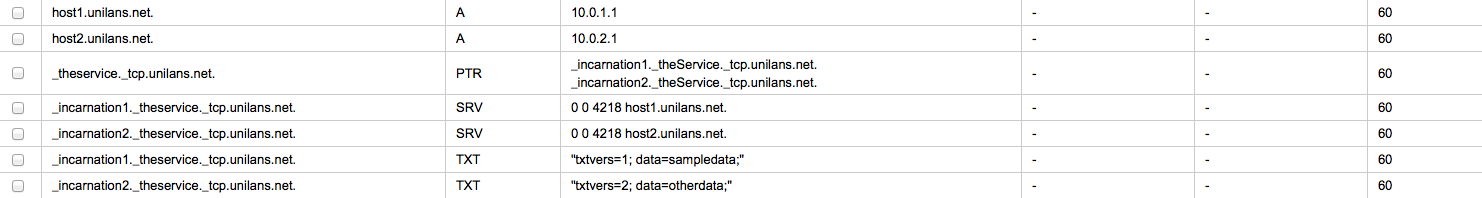

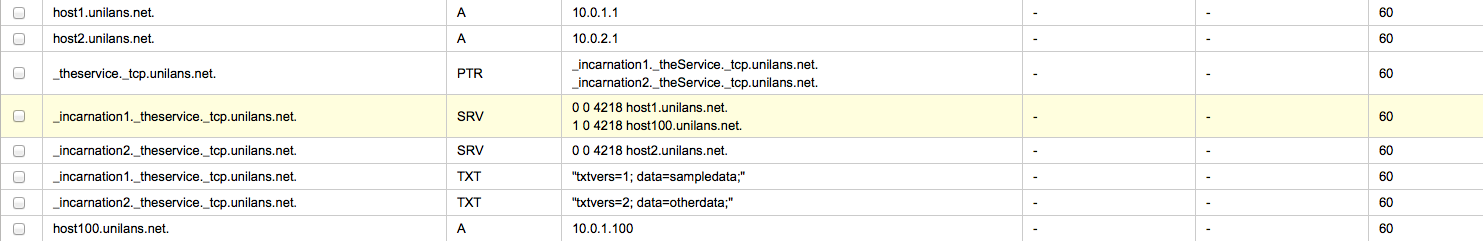

Load balancing can be implemented by adding another entry to the service locator (SRV) record with the same priority and weight:

Resulting DNS lookup:

```
:~> nslookup -q=any _incarnation1._theService._tcp.unilans.net.
Server:     8.8.8.8
Address:    8.8.8.8#53

Non-authoritative answer:
_incarnation1._theService._tcp.unilans.net  text = "txtvers=1\; data=sampledata\;"
_incarnation1._theService._tcp.unilans.net  service = 0 0 4218 host1.unilans.net.
_incarnation1._theService._tcp.unilans.net  service = 0 0 4218 host100.unilans.net.

Authoritative answers can be found from:

:~>
```

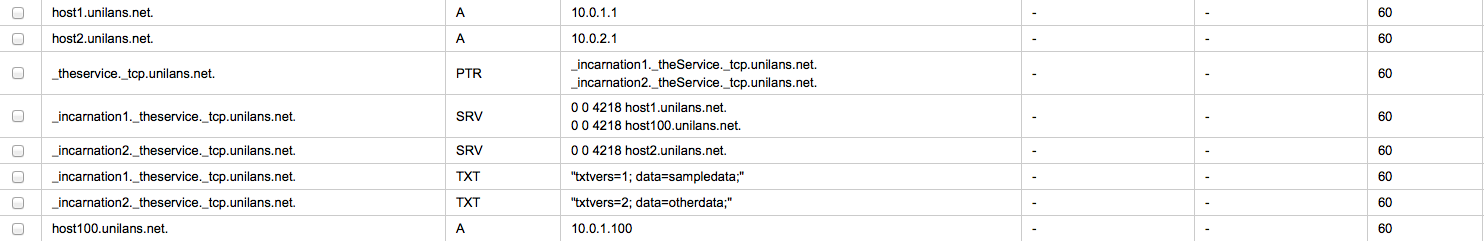

In a similar way, fail-over can be implemented by using different priorities (and uneven load distribution by using different weights):

Resulting DNS lookup:

```
:~> nslookup -q=any _incarnation1._theService._tcp.unilans.net.
Server:     8.8.8.8
Address:    8.8.8.8#53

Non-authoritative answer:
_incarnation1._theService._tcp.unilans.net  text = "txtvers=1\; data=sampledata\;"
_incarnation1._theService._tcp.unilans.net  service = 0 0 4218 host1.unilans.net.
_incarnation1._theService._tcp.unilans.net  service = 1 0 4218 host100.unilans.net.

Authoritative answers can be found from:

:~>
```

NOTE: With DNS, it is the client that implements load balancing or fail-over (although there are exceptions to this rule)!
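Since the client does this work, a minimal sketch of the ordering described in RFC 2782 may help: records are sorted by priority (lower first, which gives fail-over) and, within each priority level, drawn by weighted random selection (which gives load distribution). `order_srv` is a hypothetical helper, not part of any library; records are plain `(priority, weight, port, target)` tuples.

```python
import random
from itertools import groupby


def order_srv(records, rng=random):
    """Order (priority, weight, port, target) tuples as RFC 2782 describes.

    Lower priority values come first; within one priority level, records are
    picked one at a time by weighted random selection.
    """
    ordered = []
    by_priority = sorted(records, key=lambda r: r[0])
    for _priority, group in groupby(by_priority, key=lambda r: r[0]):
        # RFC 2782: put zero-weight records at the beginning of the list
        remaining = sorted(group, key=lambda r: r[1] != 0)
        while remaining:
            total = sum(r[1] for r in remaining)
            pick = rng.randint(0, total)  # uniform in [0, total], inclusive
            running = 0
            for index, record in enumerate(remaining):
                running += record[1]
                if running >= pick:  # first record whose running sum reaches pick
                    ordered.append(remaining.pop(index))
                    break
    return ordered


fail_over = [(0, 0, 4218, "host1.unilans.net."), (1, 0, 4218, "host100.unilans.net.")]
print([target for _p, _w, _port, target in order_srv(fail_over)])
```

A client would try the targets in the returned order and move to the next one when a connection fails. With the fail-over records above, host1.unilans.net. always comes first and host100.unilans.net. second.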

Benefits of using DNS-SD for Service Discovery

This technology can be used to support multiple versions of a service. Using the built-in support for different incarnations of the same service, versioning can be implemented in a clean, granular way. This is a common problem in REST systems, usually solved with awkward URL schemes or URL rewriting. With DNS-SD, the required metadata can be passed through TXT records and multiple versions of the communication protocol can be supported, each in its own contained environment: no namespace pollution, no clumsy URL schemes, no URL rewriting.

This technology can be utilized to reduce complexity while building distributed systems. Clients will almost certainly go through name resolution anyway, so why not incorporate service discovery into it?! Instead of dealing with an external system (installation, operation, maintenance) and all its possible issues (hard to configure, hard to maintain, immature, fault-intolerant, requires additional libraries in the codebase, etc.), incorporate discovery into name resolution. DNS is well supported on virtually all operating systems and by all programming languages that provide network programming abilities. System architecture complexity is reduced because an existing subsystem provides the additional services, instead of new systems being introduced.

This technology can be utilized to increase reliability and fault tolerance, simply by serving multiple entries in the service locator records. Clients can use priority to walk through the list of entries in a controlled manner, and weight to balance the load between the service providers at each priority level. The combination of backend support (a control plane updating DNS-SD records) and reasonably intelligent clients (implementing service discovery and priority/weight handling) should give granular control over fail-over and load balancing in the communication between multiple entities.

This technology supports system elasticity. Modern cloud service providers have APIs to control DNS zones; in this article, AWS Route53 will be used to demonstrate how an elastic service can be exposed to clients through DNS-SD. Backend service scaling logic can modify service locator records to reflect the current service state, as long as a DNS zone modification API is available. This is just one part of the control plane for the service.

Bonus point: DNS also gives you simple, replicated key-value store through TXT records!
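RFC 6763 (section 6) specifies how such key/value data is laid out: a TXT record carries one or more `key=value` strings, each preceded by a single length byte (so at most 255 bytes per pair), keys are case-insensitive, and only the first occurrence of a key counts. Below is a minimal Python sketch of that encoding with hypothetical helper names; for simplicity it does not distinguish a boolean attribute (`key` with no `=`) from an empty value (`key=`), which RFC 6763 treats differently.

```python
def encode_txt(pairs):
    """Encode a dict as TXT RDATA: a sequence of length-prefixed 'key=value' strings."""
    rdata = bytearray()
    for key, value in pairs.items():
        if not key or "=" in key:
            raise ValueError(f"invalid key: {key!r}")
        item = f"{key}={value}".encode("utf-8")
        if len(item) > 255:  # the length prefix is a single byte
            raise ValueError(f"entry too long: {key!r}")
        rdata.append(len(item))
        rdata += item
    return bytes(rdata)


def decode_txt(rdata):
    """Decode TXT RDATA into a dict with lower-cased keys."""
    pairs, offset = {}, 0
    while offset < len(rdata):
        length = rdata[offset]
        item = rdata[offset + 1:offset + 1 + length].decode("utf-8")
        offset += 1 + length
        key, _, value = item.partition("=")
        pairs.setdefault(key.lower(), value)  # RFC 6763: first occurrence wins
    return pairs


wire = encode_txt({"txtvers": "1", "data": "sampledata"})
print(wire, decode_txt(wire))
```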

Implementation of Service Discovery with DNS-SD, AWS Route53, AWS IAM and AWS EC2 UserData

The following is a set of steps and sample code to implement Service Discovery in AWS using Route53, IAM and EC2.

Manual configuration

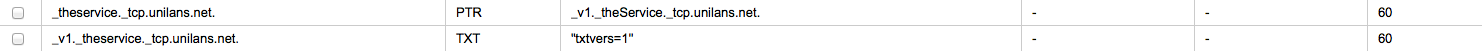

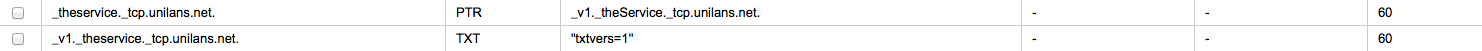

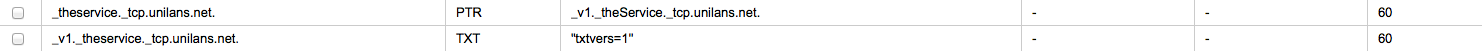

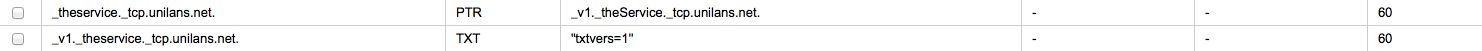

1. Create PTR and TXT Records for theService in Route53:

This is a simple example for one service with one incarnation (v1).

NOTE: There is no SRV record yet, since the service is not currently running anywhere! Active service providers will create, update and delete SRV entries.
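The same two records can also be created programmatically. The sketch below only builds a Route53 `ChangeBatch` dictionary in the shape that boto3's `change_resource_record_sets` expects; the 300-second TTL and the `_v1._theservice` naming (borrowed from the script later in this article) are assumptions, and the actual API call is left commented out.

```python
import json

TTL = 300  # seconds; an assumed value


def ptr_change(service_type, instances):
    """UPSERT the PTR record set listing every incarnation of the service."""
    # UPSERT replaces the whole record set, so all incarnations go into one change
    return {"Action": "UPSERT", "ResourceRecordSet": {
        "Name": service_type, "Type": "PTR", "TTL": TTL,
        "ResourceRecords": [{"Value": name} for name in instances]}}


def txt_change(instance, pairs):
    """UPSERT one TXT record holding 'key=value' strings."""
    # Route53 expects each string in double quotes; several strings are space-separated
    value = " ".join('"{}={}"'.format(k, v) for k, v in pairs.items())
    return {"Action": "UPSERT", "ResourceRecordSet": {
        "Name": instance, "Type": "TXT", "TTL": TTL,
        "ResourceRecords": [{"Value": value}]}}


batch = {
    "Comment": "DNS-SD skeleton for theService",
    "Changes": [
        ptr_change("_theservice._tcp.unilans.net.", ["_v1._theservice._tcp.unilans.net."]),
        txt_change("_v1._theservice._tcp.unilans.net.", {"txtvers": "1"}),
    ],
}
print(json.dumps(batch, indent=2))

# To apply it (needs AWS credentials and your real hosted zone ID):
# import boto3
# boto3.client("route53").change_resource_record_sets(
#     HostedZoneId="XXXXYYYYZZZZ", ChangeBatch=batch)
```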

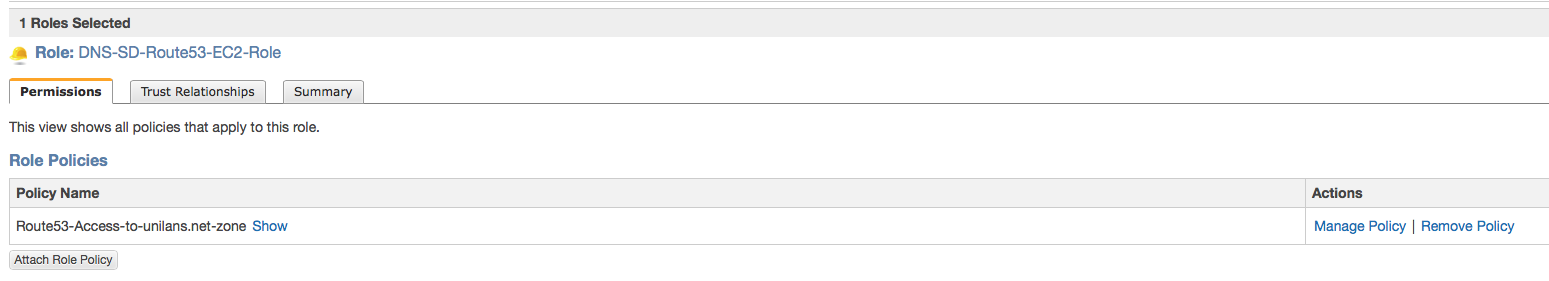

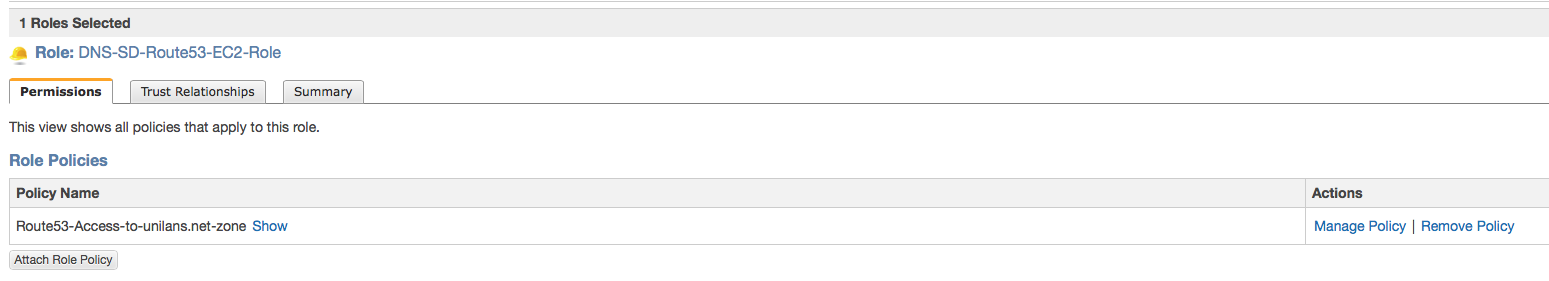

2. Create an IAM role that allows EC2 instances to modify DNS records in the desired zone:

Use the following policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "route53:ListHostedZones"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "route53:GetHostedZone",
        "route53:ListResourceRecordSets",
        "route53:ChangeResourceRecordSets"
      ],
      "Resource": "arn:aws:route53:::hostedzone/XXXXYYYYZZZZ"
    },
    {
      "Effect": "Allow",
      "Action": [
        "route53:GetChange"
      ],
      "Resource": "arn:aws:route53:::change/*"
    }
  ]
}
```

… where XXXXYYYYZZZZ is your hosted zone ID!
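If you prefer to script the policy rather than substitute the zone ID by hand, a minimal sketch follows. It assumes the response shape of the Route53 `ListHostedZonesByName` API (as returned by boto3's `list_hosted_zones_by_name`), where zone IDs come back as `/hostedzone/<ID>`; the helper names are hypothetical.

```python
import json


def zone_id_from_response(response, zone_name):
    """Pick the hosted zone ID for zone_name from a ListHostedZonesByName response."""
    wanted = zone_name.rstrip(".").lower() + "."
    for zone in response["HostedZones"]:
        if zone["Name"].lower() == wanted:
            # IDs look like '/hostedzone/<ID>'; the policy ARN needs only '<ID>'
            return zone["Id"].split("/")[-1]
    raise LookupError("hosted zone not found: " + zone_name)


def dns_sd_policy(zone_id):
    """Render the DNS-SD IAM policy for a concrete hosted zone ID."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["route53:ListHostedZones"], "Resource": "*"},
            {"Effect": "Allow",
             "Action": ["route53:GetHostedZone", "route53:ListResourceRecordSets",
                        "route53:ChangeResourceRecordSets"],
             "Resource": "arn:aws:route53:::hostedzone/" + zone_id},
            {"Effect": "Allow", "Action": ["route53:GetChange"],
             "Resource": "arn:aws:route53:::change/*"},
        ],
    }


# Hypothetical usage (needs AWS credentials):
# resp = boto3.client("route53").list_hosted_zones_by_name(DNSName="unilans.net.")
# print(json.dumps(dns_sd_policy(zone_id_from_response(resp, "unilans.net")), indent=2))
```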

Automated JOIN/LEAVE in service group

The manual settings outlined in the previous section provide the basic framework of the DNS-SD setup. There is no SRV record, since there are no active instances providing the service. Ideally, each active service provider will register with the service group when it becomes available and de-register when it goes away. This is the key point: DNS-SD can be integrated cleanly with the elastic nature of the cloud. Once this integration is in place, all clients only need to resolve DNS records in order to obtain the list of active service providers. For demonstration purposes, the following script was created:

```python
#!/usr/bin/env python
# The following code modifies AWS Route53 entries to demonstrate usage of DNS-SD in cloud environments
#
# To JOIN Service group:
# dns-sd.py -z unilans.net -s _v1._theservice._tcp.unilans.net. -p 8080 join
#
# To LEAVE Service group:
# dns-sd.py -z unilans.net -s _v1._theservice._tcp.unilans.net. -p 8080 leave
#
# NOTE: THIS IS FOR DEMONSTRATION PURPOSES ONLY! ERROR HANDLING IS ABSOLUTE MINIMAL! THIS IS *NOT* PRODUCTION CODE!

from __future__ import print_function

import sys
import copy
import argparse

import requests
import boto.route53


def srv_target(value):
    """Return the target host of an SRV value 'priority weight port target'."""
    return value.split()[3].rstrip('.').lower()


def main():
    """ Main entry point """

    # Parse command line arguments
    parser = argparse.ArgumentParser(description='Example code to update service records in Route53 hosted DNS zones')
    parser.add_argument('-z', '--zone', type=str, required=True, dest='zone', help='Zone Name')
    parser.add_argument('-s', '--service', type=str, required=True, dest='service', help='Service Name')
    parser.add_argument('-p', '--port', type=int, required=True, dest='port', help='Service Port')
    parser.add_argument('operation', metavar='OPERATION', type=str, help='Operation [join|leave]', choices=['join', 'leave'])
    args = parser.parse_args()

    operation = args.operation
    zone = args.zone
    service = args.service
    port = args.port

    # Establish connection to Route53 API
    conn = boto.route53.connection.Route53Connection()

    # Get zone handler
    z = conn.get_zone(zone)
    if not z:
        print("{progname}: Wrong or inaccessible zone!".format(progname=sys.argv[0]))
        sys.exit(1)

    # Get EC2 Public IP Address from the instance metadata service
    response = requests.get('http://169.254.169.254/latest/meta-data/public-ipv4', timeout=5)
    if response.status_code == 200:
        public_ipv4 = response.text
    else:
        print("{progname}: Unable to obtain public IP address from AWS!".format(progname=sys.argv[0]))
        sys.exit(1)

    # Generate domain-specific hostname, e.g. 54-12-34-56.unilans.net
    fqdn_hostname = '{hostname}.{zone}'.format(hostname=public_ipv4.replace(".", "-"), zone=zone)

    # Act, based on operation request (join | leave)
    if operation == 'join':
        # Create A record
        z.add_a(fqdn_hostname, public_ipv4, ttl=60)

        # Obtain service locator records
        srv_value = u'0 0 {port} {fqdn}'.format(port=port, fqdn=fqdn_hostname)
        r = z.find_records(service, 'SRV')
        if not r:
            # Create SRV record
            z.add_record('SRV', service, srv_value, ttl=60)
        else:
            # Add to SRV record
            tmp_r = copy.deepcopy(r)
            tmp_r.resource_records.append(srv_value)
            z.update_record(r, tmp_r.resource_records)
    elif operation == 'leave':
        # Remove entry from the SRV record
        r = z.find_records(service, 'SRV')
        if r:
            # Build a new list instead of removing items while iterating, and compare
            # whole target names so 1-2-3-4.<zone> does not also match 11-2-3-4.<zone>
            own_target = fqdn_hostname.rstrip('.').lower()
            remaining = [record for record in r.resource_records
                         if srv_target(record) != own_target]
            if len(remaining) == 0:
                # Remove the SRV entry itself
                z.delete_record(r)
            else:
                # Update the SRV record
                z.update_record(r, remaining)

        # Remove A record
        r = z.find_records(fqdn_hostname, 'A')
        if r:
            z.delete_record(r)
    else:
        print("{progname}: Wrong operation!".format(progname=sys.argv[0]))
        sys.exit(1)


if __name__ == '__main__':
    main()
```

A copy of the code can be downloaded from https://s3-us-west-2.amazonaws.com/blog.xi-group.com/aws-route53-iam-ec2-dns-sd/dns-sd.py

Given a DNS zone, a service name and a service port, this code updates the necessary DNS records to join or leave the service group.
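The record manipulation at the heart of the script can be expressed as two pure functions over the list of SRV values, which makes it easy to test without touching Route53. This is an illustrative sketch with hypothetical helper names; it compares whole target names so that one host leaving cannot remove a similarly named host, and it makes a repeated join a no-op.

```python
def srv_target(value):
    """Return the target host of an SRV value 'priority weight port target'."""
    return value.split()[3].rstrip(".").lower()


def join_values(values, port, fqdn, priority=0, weight=0):
    """Return the SRV value list after this host joins (idempotent)."""
    entry = "{} {} {} {}".format(priority, weight, port, fqdn)
    if any(srv_target(v) == srv_target(entry) for v in values):
        return list(values)  # already registered: avoid a duplicate value
    return list(values) + [entry]


def leave_values(values, fqdn):
    """Return the SRV value list after this host leaves; [] means delete the record set."""
    gone = fqdn.rstrip(".").lower()
    # A substring test would let '1-2-3-4.unilans.net' also match '11-2-3-4.unilans.net'
    return [v for v in values if srv_target(v) != gone]


values = join_values([], 8080, "1-2-3-4.unilans.net")
values = join_values(values, 8080, "11-2-3-4.unilans.net")
print(leave_values(values, "1-2-3-4.unilans.net"))
```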

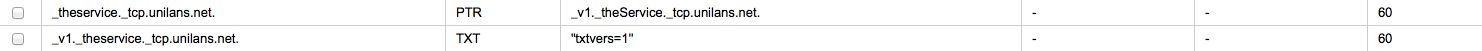

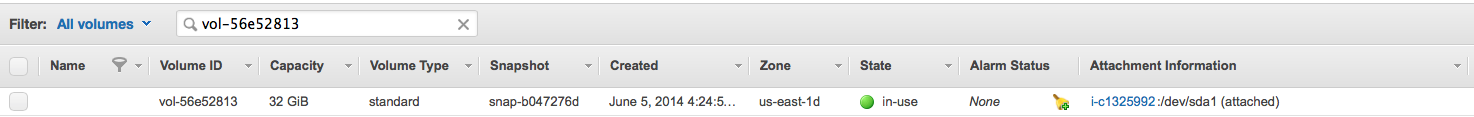

Starting with the initial state:

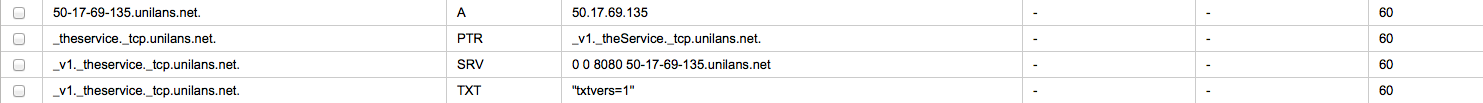

Executing JOIN:

```
dns-sd.py -z unilans.net -s _v1._theservice._tcp.unilans.net. -p 8080 join
```

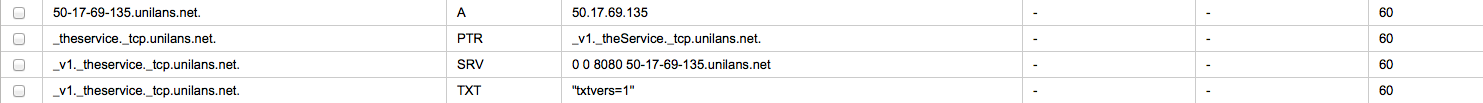

Result:

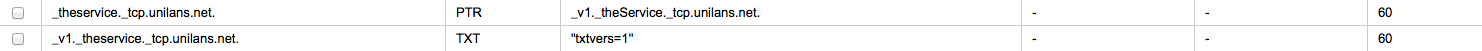

Executing LEAVE:

```
dns-sd.py -z unilans.net -s _v1._theservice._tcp.unilans.net. -p 8080 leave
```

Result:

A domain-specific hostname (A record) is created, and the service locator record (SRV) is created with the proper port and hostname. When the host leaves the service group, the domain-specific hostname is removed, as is its entry in the SRV record (or the whole record, if this was the last entry).

Fully automated setup

EC2 UserData will be used to fully automate the process. There are many options (Puppet, Chef, Salt, Ansible) and all of them could be used, but the UserData solution has lower complexity, no external dependencies, and can be used directly by other AWS services such as CloudFormation and Auto Scaling groups.

The full UserData content is as follows:

```bash
#!/bin/bash -ex

# Debian apt-get install function
function apt_get_install()
{
    DEBIAN_FRONTEND=noninteractive apt-get -y \
        -o DPkg::Options::=--force-confdef \
        -o DPkg::Options::=--force-confold \
        install "$@"
}

# Mark execution start
echo "STARTING" > /root/user_data_run

# Some initial setup
set -e -x
export DEBIAN_FRONTEND=noninteractive
apt-get update && apt-get upgrade -y

# Install required packages
apt_get_install python-boto python-requests
apt_get_install nginx

# Create test html page (-p: do not fail if the directory already exists)
mkdir -p /var/www
cat > /var/www/index.html << "EOF"
<html>
<head>
    <title>Demo Page</title>
</head>
<body>
    <center><h2>Demo Page</h2></center><br>
    <center>Status: running</center>
</body>
</html>
EOF

# Configure NginX
cat > /etc/nginx/conf.d/demo.conf << "EOF"
# Minimal NginX VirtualHost setup
server {
    listen 8080;

    root /var/www;
    index index.html index.htm;

    location / {
        try_files $uri $uri/ =404;
    }
}
EOF

# Restart NginX with the new settings
/etc/init.d/nginx restart

# Create dns-sd.py
cat > /usr/local/sbin/dns-sd.py << "EOF"
#!/usr/bin/env python
# The following code modifies AWS Route53 entries to demonstrate usage of DNS-SD in cloud environments
#
# To JOIN Service group:
# dns-sd.py -z unilans.net -s _v1._theservice._tcp.unilans.net. -p 8080 join
#
# To LEAVE Service group:
# dns-sd.py -z unilans.net -s _v1._theservice._tcp.unilans.net. -p 8080 leave
#
# NOTE: THIS IS FOR DEMONSTRATION PURPOSES ONLY! ERROR HANDLING IS ABSOLUTE MINIMAL! THIS IS *NOT* PRODUCTION CODE!

from __future__ import print_function

import sys
import copy
import argparse

import requests
import boto.route53


def srv_target(value):
    """Return the target host of an SRV value 'priority weight port target'."""
    return value.split()[3].rstrip('.').lower()


def main():
    """ Main entry point """

    # Parse command line arguments
    parser = argparse.ArgumentParser(description='Example code to update service records in Route53 hosted DNS zones')
    parser.add_argument('-z', '--zone', type=str, required=True, dest='zone', help='Zone Name')
    parser.add_argument('-s', '--service', type=str, required=True, dest='service', help='Service Name')
    parser.add_argument('-p', '--port', type=int, required=True, dest='port', help='Service Port')
    parser.add_argument('operation', metavar='OPERATION', type=str, help='Operation [join|leave]', choices=['join', 'leave'])
    args = parser.parse_args()

    operation = args.operation
    zone = args.zone
    service = args.service
    port = args.port

    # Establish connection to Route53 API
    conn = boto.route53.connection.Route53Connection()

    # Get zone handler
    z = conn.get_zone(zone)
    if not z:
        print("{progname}: Wrong or inaccessible zone!".format(progname=sys.argv[0]))
        sys.exit(1)

    # Get EC2 Public IP Address from the instance metadata service
    response = requests.get('http://169.254.169.254/latest/meta-data/public-ipv4', timeout=5)
    if response.status_code == 200:
        public_ipv4 = response.text
    else:
        print("{progname}: Unable to obtain public IP address from AWS!".format(progname=sys.argv[0]))
        sys.exit(1)

    # Generate domain-specific hostname, e.g. 54-12-34-56.unilans.net
    fqdn_hostname = '{hostname}.{zone}'.format(hostname=public_ipv4.replace(".", "-"), zone=zone)

    # Act, based on operation request (join | leave)
    if operation == 'join':
        # Create A record
        z.add_a(fqdn_hostname, public_ipv4, ttl=60)

        # Obtain service locator records
        srv_value = u'0 0 {port} {fqdn}'.format(port=port, fqdn=fqdn_hostname)
        r = z.find_records(service, 'SRV')
        if not r:
            # Create SRV record
            z.add_record('SRV', service, srv_value, ttl=60)
        else:
            # Add to SRV record
            tmp_r = copy.deepcopy(r)
            tmp_r.resource_records.append(srv_value)
            z.update_record(r, tmp_r.resource_records)
    elif operation == 'leave':
        # Remove entry from the SRV record
        r = z.find_records(service, 'SRV')
        if r:
            # Build a new list instead of removing items while iterating, and compare
            # whole target names so 1-2-3-4.<zone> does not also match 11-2-3-4.<zone>
            own_target = fqdn_hostname.rstrip('.').lower()
            remaining = [record for record in r.resource_records
                         if srv_target(record) != own_target]
            if len(remaining) == 0:
                # Remove the SRV entry itself
                z.delete_record(r)
            else:
                # Update the SRV record
                z.update_record(r, remaining)

        # Remove A record
        r = z.find_records(fqdn_hostname, 'A')
        if r:
            z.delete_record(r)
    else:
        print("{progname}: Wrong operation!".format(progname=sys.argv[0]))
        sys.exit(1)


if __name__ == '__main__':
    main()
EOF

# Make dns-sd.py executable
chmod +x /usr/local/sbin/dns-sd.py

# Create startup job
cat > /etc/init.d/dns-sd << "EOF"
#! /bin/bash
#
# Author: Ivo Vachkov (ivachkov@xi-group.com)
#
### BEGIN INIT INFO
# Provides:          dns-sd
# Required-Start:    $network $remote_fs
# Should-Start:
# Required-Stop:     $network $remote_fs
# Should-Stop:
# Default-Start:     2 3 4 5
# Default-Stop:      0 1 6
# Short-Description: Start / Stop script for DNS-SD
# Description:       Use to JOIN/LEAVE DNS-SD Service Group
### END INIT INFO

set -e
umask 022

# Configuration details
DNS_SD="/usr/local/sbin/dns-sd.py"
DNS_ZONE="unilans.net"
SERVICE_NAME="_v1._theservice._tcp.unilans.net."
SERVICE_PORT="8080"

. /lib/lsb/init-functions

export PATH="${PATH:+$PATH:}/usr/sbin:/sbin:/usr/bin:/usr/local/bin:/usr/local/sbin"

# Default Start
function dns_sd_join () {
    $DNS_SD -z $DNS_ZONE -s $SERVICE_NAME -p $SERVICE_PORT join
}

# Default Stop
function dns_sd_leave () {
    $DNS_SD -z $DNS_ZONE -s $SERVICE_NAME -p $SERVICE_PORT leave
}

case "$1" in
    start)
        log_daemon_msg "Joining $DNS_ZONE|$SERVICE_NAME:$SERVICE_PORT ... " || true
        dns_sd_join
        ;;
    stop)
        log_daemon_msg "Leaving $DNS_ZONE|$SERVICE_NAME:$SERVICE_PORT ... " || true
        dns_sd_leave
        ;;
    restart)
        log_daemon_msg "Restarting ... " || true
        dns_sd_leave
        dns_sd_join
        ;;
    *)
        log_action_msg "Usage: $0 {start|stop|restart}" || true
        exit 1
esac

exit 0
EOF

# Make /etc/init.d/dns-sd executable
chmod +x /etc/init.d/dns-sd

# Set automatic execution on start/shutdown
update-rc.d dns-sd defaults 99

# Execute initial service group JOIN
/etc/init.d/dns-sd start

# Mark execution end
echo "DONE" > /root/user_data_run
```

A copy of the code can be downloaded from https://s3-us-west-2.amazonaws.com/blog.xi-group.com/aws-route53-iam-ec2-dns-sd/userdata.sh

Starting three test instances to verify the functionality:

```
aws ec2 run-instances --image-id ami-018c9568 --count 3 --instance-type t1.micro \
    --key-name test-key --security-groups test-sg \
    --iam-instance-profile Name=DNS-SD-Route53-EC2-Role --user-data file://userdata.sh
```

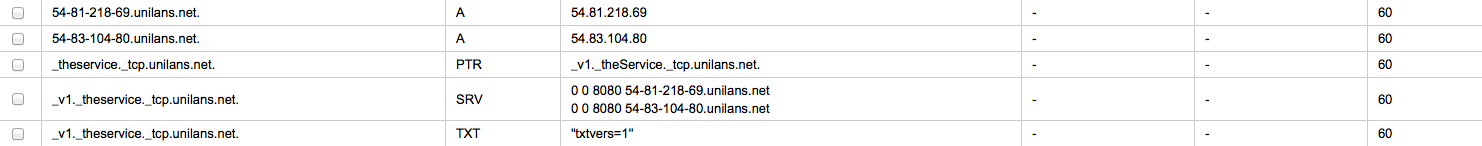

Resulting changes to Route53:

Three new instances registered themselves in the service group. Manually stopping one of them leads to its de-registration:

Elastic systems can be implemented with DNS-SD! Note, however, that a DNS message is limited to 65,535 bytes, so the number of entries that fit into an SRV record set, although large, is limited!
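To put that limit in perspective, here is a rough back-of-the-envelope estimate of how many SRV answers fit into one response. It assumes each answer's owner name is compressed to a 2-byte pointer, ignores EDNS0 and additional-section records, and leaves the SRV target uncompressed as RFC 2782 requires; the helper names are illustrative.

```python
def wire_name_length(name):
    """Bytes a domain name occupies on the wire: one length byte per label plus the root byte."""
    labels = [label for label in name.rstrip(".").split(".") if label]
    return sum(1 + len(label.encode("ascii")) for label in labels) + 1


def srv_rr_size(target):
    """Approximate size of one SRV answer record, assuming a compressed owner name."""
    owner = 2                                      # compression pointer to the question name
    fixed = 2 + 2 + 4 + 2                          # TYPE, CLASS, TTL, RDLENGTH
    rdata = 2 + 2 + 2 + wire_name_length(target)   # priority, weight, port, uncompressed target
    return owner + fixed + rdata


def max_srv_entries(target, budget, question_name):
    """Rough count of SRV answers of this size that fit into a message of `budget` bytes."""
    header = 12
    question = wire_name_length(question_name) + 4  # QNAME + QTYPE + QCLASS
    return (budget - header - question) // srv_rr_size(target)


service = "_v1._theservice._tcp.unilans.net."
for budget in (512, 65535):
    print(budget, max_srv_entries("host1.unilans.net.", budget, service))
```

For a target like host1.unilans.net. each answer takes about 37 bytes, so roughly 12 entries fit into a classic 512-byte UDP response (RFC 1035) and about 1769 into a maximum-size message. Beyond the UDP size the response is truncated and the resolver has to retry over TCP (or use EDNS0 with a larger buffer).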

Client code

To demonstrate DNS-SD resolution, the following sample code was created:

```python
#!/usr/bin/env python
# The following code demonstrates how to resolve DNS-SD Service Descriptions
#
# Example execution:
# client.py -z unilans.net. -s theService -p tcp -v v1
#
# NOTE: THIS IS FOR DEMONSTRATION PURPOSES ONLY! ERROR HANDLING IS ABSOLUTE MINIMAL! THIS IS *NOT* PRODUCTION CODE!

from __future__ import print_function

import sys
import random
import argparse

import requests
import dns.resolver

# dnspython 2.x provides resolve(); dnspython 1.x only has query()
resolve = getattr(dns.resolver, 'resolve', None) or dns.resolver.query


def main():
    """ Main entry point """

    # Parse command line arguments
    parser = argparse.ArgumentParser(description='Example code to resolve DNS-SD service descriptions')
    parser.add_argument('-z', '--zone', type=str, required=True, dest='zone', help='Zone Name')
    parser.add_argument('-s', '--service', type=str, required=True, dest='service', help='Service Name')
    parser.add_argument('-p', '--protocol', type=str, required=True, dest='protocol', help='Service Transport Protocol [tcp|udp]', choices=['tcp', 'udp'])
    parser.add_argument('-v', '--version', type=str, required=True, dest='version', help='Service Version')
    args = parser.parse_args()

    zone = args.zone
    service = args.service
    protocol = args.protocol
    version = args.version

    # Obtain PTR Record
    service_id = '_{service}._{protocol}.{zone}'.format(service=service, protocol=protocol, zone=zone)
    answer = resolve(service_id, 'PTR')

    # Find the service incarnation whose first label is exactly _<version>
    # (a substring test would let 'v1' also match '_v10')
    service_version = None
    for record in answer:
        first_label = str(record.target).split('.')[0]
        if first_label.lower() == '_' + version.lower():
            service_version = str(record.target)

    if not service_version:
        print("{progname}: No incarnation found for version {v}!".format(progname=sys.argv[0], v=version))
        sys.exit(1)

    # Discover and consume the actual service: get SRV and TXT
    answer_srv = resolve(service_version, 'SRV')
    answer_txt = resolve(service_version, 'TXT')

    # If those are valid, pick a random service location entry
    if answer_srv and answer_txt:
        srv_entry = random.choice(list(answer_srv))
        service_addr = srv_entry.target.to_text(omit_final_dot=True)
        service_port = srv_entry.port

        service_uri = 'http://{host}:{port}/'.format(host=service_addr, port=service_port)
        r = requests.get(service_uri, timeout=5)
        if r.status_code == 200:
            print(r.text)


if __name__ == '__main__':
    main()
```

A copy of the code can be downloaded from https://s3-us-west-2.amazonaws.com/blog.xi-group.com/aws-route53-iam-ec2-dns-sd/client.py

Why would that be better?! Yes, there is added complexity in the name resolution process. But, more importantly, the details needed to find the service are independent of its location and are not specific to the client. Service-specific infrastructure can change, but the client will not be affected, as long as it performs the discovery process.

Sample run:

```
:~> client.py -z unilans.net. -s theService -p tcp -v v1
<html>
<head>
    <title>Demo Page</title>
</head>
<body>
    <center><h2>Demo Page</h2></center><br>
    <center>Status: running</center>
</body>
</html>

:~>
```

Voilà! Reliable Service Discovery in elastic systems!

Additional Notes

Some additional notes and known limitations:

- Examples in this article could be extended to support fail-over or more sophisticated forms of load balancing. The current random.choice() solution should be good enough for the generic case;

- A more complex setup with different priorities and weights can be demonstrated too;

- A service health check before DNS-SD registration can be demonstrated too;

- Non-HTTP services can use DNS-SD as well; the technology is application-agnostic;

- TXT contents are not used throughout this article. They can be used to carry additional metadata (NOTE: This is public! Anyone can query your DNS TXT records with this setup!).
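For the health-check idea, one possible shape is to probe the local port before registering. The sketch below assumes a TCP service and runs the `dns-sd.py` join command from this article only if the probe succeeds; `port_is_open` and `join_if_healthy` are hypothetical helpers, and a plain TCP connect is a much weaker check than an application-level probe.

```python
import socket
import subprocess


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def join_if_healthy(port, join_command, host="127.0.0.1"):
    """Run join_command only when the local service accepts TCP connections."""
    if not port_is_open(host, port):
        return False
    subprocess.run(join_command, check=True)  # raises if the join command fails
    return True


# Hypothetical usage with the dns-sd.py script from this article:
# join_if_healthy(8080, ["/usr/local/sbin/dns-sd.py", "-z", "unilans.net",
#                        "-s", "_v1._theservice._tcp.unilans.net.", "-p", "8080", "join"])
```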

Conclusion

A quick implementation of DNS-SD with AWS Route53, IAM and EC2 was presented in this article. It can be used as a bare-bones setup that can be further extended and productized. It solves a common problem in elastic systems: Service Discovery! All key components are implemented in either Python or shell script with minimal dependencies (sudo aptitude install awscli python-boto python-requests python-dnspython), although the implementation is not dependent on a particular programming language.

References