In the everyday life of a DevOps engineer you will have to create multiple pieces of code. Some of those will be run once; others … well, others will live forever. Although it may be tempting to just put all the commands in a text editor, save the result and execute it, one should always consider the “bigger picture”. What will happen if your script is run on another OS, on another Linux distribution, or even on a different version of the same Linux distribution? Or think about what will happen if your neat 10-line script somehow has to be executed on, say, 500 servers. Can you be sure that all the commands will run successfully there? Can you even be sure that all the commands will be present? Usually … no!

Faced with similar problems on a daily basis, we started devising simple solutions and practices to address them. One of those is standardizing the way different utilities behave, the way they take arguments and the way they report errors. Upon further investigation it became clear that a pattern can be extracted and synthesized into a series of templates that one can use in daily work to keep behavior consistent across different utilities and components.

Here is the basic template used in shell scripts:

```shell
#!/bin/sh
#
# DESCRIPTION: ... Include functional description ...
#
# Requirements:
#   awk
#   ...
#   uname
#
# Example usage:
#   $ template.sh -h
#   $ template.sh -p ARG1 -q ARG2
#

RET_CODE_OK=0
RET_CODE_ERROR=1

# Help / Usage function
print_help() {
    echo "$0: Functional description of the utility"
    echo ""
    echo "$0: Usage"
    echo "    [-h] Print help"
    echo "    [-p] (MANDATORY) First argument"
    echo "    [-q] (OPTIONAL) Second argument"
    # No exit here: the caller decides which return code to use
}

# Check for supported operating system
p_uname=$(command -v uname)
if [ ! -x "$p_uname" ]; then
    echo "$0: No UNAME available in the system" >&2
    exit $RET_CODE_ERROR;
fi
OS=$($p_uname)
if [ "$OS" != "Linux" ]; then
    echo "$0: Unsupported OS!" >&2
    exit $RET_CODE_ERROR;
fi

# Check if awk is available in the system
p_awk=$(command -v awk)
if [ ! -x "$p_awk" ]; then
    echo "$0: No AWK available in the system!" >&2
    exit $RET_CODE_ERROR;
fi

# Check for other used local utilities
#   bc
#   curl
#   grep
#   etc ...

# Parse command line arguments
while test -n "$1"; do
    case "$1" in
    --help|-h)
        print_help
        exit $RET_CODE_OK
        ;;
    -p)
        P_ARG=$2
        shift
        ;;
    -q)
        Q_ARG=$2
        shift
        ;;
    *)
        echo "$0: Unknown Argument: $1" >&2
        print_help
        exit $RET_CODE_ERROR;
        ;;
    esac
    shift
done

# Check if mandatory argument is present?
if [ -z "$P_ARG" ]; then
    echo "$0: Required parameter not specified!" >&2
    print_help
    exit $RET_CODE_ERROR;
fi

# ...

# Check if optional argument is present and if not, initialize!
if [ -z "$Q_ARG" ]; then
    Q_ARG="0";
fi

# ...

# DO THE ACTUAL WORK HERE

exit $RET_CODE_OK;
```

Nothing fancy: a basic framework that does the following:

- Lines 3 – 13: Make sure basic documentation, dependency list and example usage patterns are provided with the script itself;

- Lines 15 – 16: Define meaningful return codes to allow other utils to identify possible execution problems and react accordingly;

- Lines 18 – 27: Basic help/usage() function to provide the user with short guidance on how to use the script;

- Lines 29 – 52: Dependency checks to make sure all utilities the script needs are available and executable in the system;

- Lines 54 – 77: Argument parsing of everything passed on the command line that supports both short and long argument names;

- Lines 79 – 91: Validity checks that make sure the arguments carry contextually correct values;

- Lines 95 – N: Actual programming logic to be implemented …
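When a script depends on more than a couple of utilities, the per-utility dependency checks can be folded into one small helper. The following is only a sketch of that idea: `require_cmds` is a hypothetical name that is not part of the template, and it relies on the POSIX `command -v` built-in to look up each utility.

```shell
#!/bin/sh
# Sketch only: require_cmds is a hypothetical helper, not part of the
# original template.

RET_CODE_OK=0
RET_CODE_ERROR=1

# Report every command from the argument list that cannot be found
# and return non-zero if at least one of them is missing.
require_cmds() {
    missing=""
    for cmd in "$@"; do
        # command -v prints nothing and fails when the command is not found
        if ! command -v "$cmd" >/dev/null 2>&1; then
            missing="$missing $cmd"
        fi
    done
    if [ -n "$missing" ]; then
        echo "$0: Missing required utilities:$missing" >&2
        return $RET_CODE_ERROR
    fi
    return $RET_CODE_OK
}

# List every dependency once, near the top of the script
require_cmds uname awk || exit $RET_CODE_ERROR
```

Reporting all missing utilities in one message, rather than stopping at the first, tends to save a round trip when the script lands on a freshly provisioned server.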

This template is successfully used in various scenarios: command line utilities, Nagios plugins, startup/shutdown scripts, UserData scripts, daemons implemented in shell script with the help of start-stop-daemon, etc. It is also used to allow deployment on multiple operating systems and distribution versions. The resulting utilities and system components are more resilient, include better documentation and dependency sections, and provide the user with a consistent and intuitive way to get help or pass arguments. Error handling is functional enough to go beyond the simple OK / ERROR state. All of these are important features when components must run in highly heterogeneous environments such as most cloud deployments!
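As an illustration of going beyond a plain OK / ERROR state, the return codes can follow the Nagios plugin convention (0 = OK, 1 = WARNING, 2 = CRITICAL, 3 = UNKNOWN). The sketch below assumes integer values and thresholds; the `check_value` helper and the numbers passed to it are hypothetical and only show the pattern.

```shell
#!/bin/sh
# Sketch only: check_value and the thresholds used below are hypothetical;
# the return codes follow the Nagios plugin convention.

RET_CODE_OK=0
RET_CODE_WARNING=1
RET_CODE_CRITICAL=2
RET_CODE_UNKNOWN=3

# Compare an integer value against warning and critical thresholds,
# print a one-line status and return the matching code.
check_value() {
    value=$1
    warn=$2
    crit=$3

    # Reject empty or non-numeric input
    case "$value" in
        ''|*[!0-9]*)
            echo "UNKNOWN - invalid value: '$value'"
            return $RET_CODE_UNKNOWN
            ;;
    esac

    if [ "$value" -ge "$crit" ]; then
        echo "CRITICAL - value is $value"
        return $RET_CODE_CRITICAL
    elif [ "$value" -ge "$warn" ]; then
        echo "WARNING - value is $value"
        return $RET_CODE_WARNING
    fi

    echo "OK - value is $value"
    return $RET_CODE_OK
}

# Example: 85 is above the warning threshold (80) but below critical (95)
rc=0
check_value 85 80 95 || rc=$?
echo "return code: $rc"
```

A monitoring system or a calling script can then branch on the return code instead of parsing the message text.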

Related Posts

- UserData Template for Ubuntu 14.04 EC2 Instances in AWS

- How to implement multi-cloud deployment for scalability and reliability

- Small Tip: How to run non-deamon()-ized processes in the background with SupervisorD

- How to deploy single-node Hadoop setup in AWS

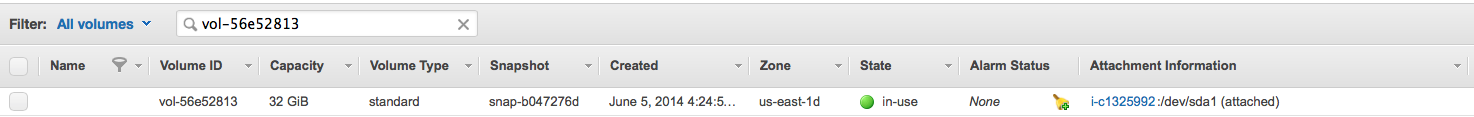

- Small Tip: How to use –block-device-mappings to manage instance volumes with AWS CLI